This post is part of a series describing a demo project that employs various real-world patterns and tools to provide access to data in a MongoDB database for the DevExtreme grid widgets. You can find the introduction to and overview of the post series by following this link.

Continuing from my first post about the Event Sourcing branch of the demo project, here are some details about my implementation. For quick reference, here’s the link to the branch again.

Implementation

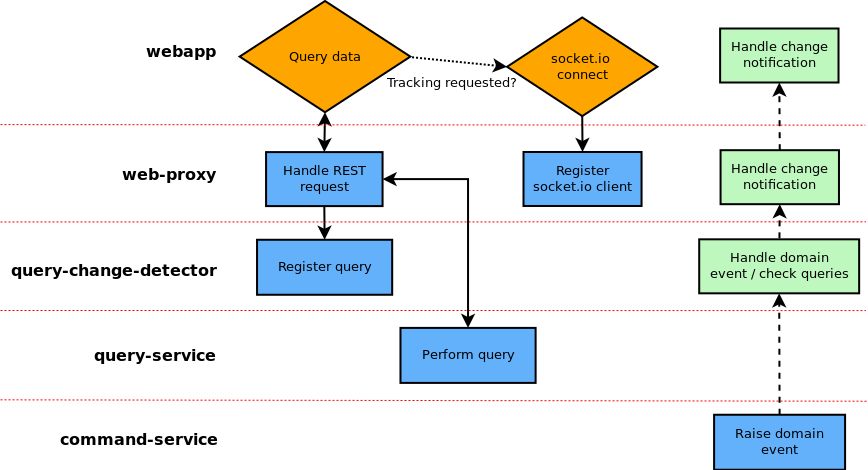

As you can see from the diagrams above, there are several changes to the original structure to incorporate the new architecture and functionality. I will first describe how a new row ends up being persisted in the read model.

Using RabbitMQ

As described in a previous post, my demo application uses Seneca for communication purposes. In the original branch of the demo, I configured Seneca in each service to communicate directly with every other service. While this is not the most common approach in a real-world application, it worked just fine with the demo setup.

To support the event features of the new architecture, a communication channel that supports broadcasting events is now mandatory. Seneca itself doesn't provide this, but it has pluggable transport channels, some of which support event broadcasting. I configured Seneca to use RabbitMQ with the help of seneca-amqp-transport. Here's an example of the changed Seneca startup code (from query-service/index.js):

```javascript
seneca
  .use('seneca-amqp-transport')
  .use('query-values')
  .use('query-events')
  .listen({
    type: 'amqp',
    hostname: process.env.RABBITMQ_HOST || 'rabbitmq',
    port: parseInt(process.env.RABBITMQ_PORT) || 5672,
    pin: 'role:entitiesQuery',
    socketOptions: {
      noDelay: true
    }
  });
```

In my orchestrated service setup, the RabbitMQ service is spun up together with all the others in docker-compose.yml. If you compare the details of this file against the master branch version, you will find that things are actually much simpler now that services only depend on rabbitmq (and sometimes mongo), but not on each other.

The command service

When a new row is created in the front-end, the command service receives a message from the web-proxy. In my new implementation, I have completely replaced the old command service with code that uses the reSolve library. reSolve is currently being developed by one of our teams, and I expect to be able to blog more about it in the future. Right now there isn't any public information available.

As the diagram shows, the most important aspect of the command service is that it raises domain events that can be handled by other services. The structure of the events is determined by the simple return statements at the ends of the create and update command declarations (from command-service/index.js):

```javascript
...
return {
  type: 'created',
  payload: args
};
...
return {
  type: 'updated',
  payload: args
};
```

In addition to this, the command service performs very limited local state handling, which enables it to detect whether entities already exist. The event handler for the created event receives the domain event and modifies the local state to reflect the fact that the entity with the given id now exists:

```javascript
eventHandlers: {
  created: (state, args) => ({
    exists: true,
    id: args.aggregateId
  })
}
```

There is also a very basic validation implementation for the create command, simply to make sure that all relevant information is actually supplied:

```javascript
if (
  !args.aggregateId ||
  !args.data.date1 ||
  !args.data.date2 ||
  !args.data.int1 ||
  !args.data.int2 ||
  !args.data.string
)
  throw new Error(
    `Can't create incomplete aggregate: ${JSON.stringify(args)}`
  );
```

In the case of the demo application, commands can only be sent by specific components of my own system. As such, the validation performed on this level is only a safety net against my own accidental misuse. Business level validation is performed at other points, as I already mentioned in the diagram description above.
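To make the interplay between commands, events and local state more concrete, here is a minimal, dependency-free sketch of the same pattern. It is not reSolve code. The names `entityAggregate`, `loadState`, `handleCommand` and `REQUIRED_FIELDS` are hypothetical, and a plain array stands in for the event store. There is one deliberate difference from the validation shown above. That code tests for falsy values, so it would also reject an `int1` or `int2` of 0. This sketch checks for `null`/`undefined` instead.

```javascript
// Hypothetical, dependency-free sketch of an event-sourced aggregate.
// It mirrors the shape used in the demo (commands return { type, payload },
// event handlers fold events into local state), but it is NOT the reSolve API.
const REQUIRED_FIELDS = ['date1', 'date2', 'int1', 'int2', 'string'];

const entityAggregate = {
  initialState: () => ({}),

  eventHandlers: {
    created: (state, event) => ({ exists: true, id: event.aggregateId }),
    updated: state => state // an update does not change whether the entity exists
  },

  commands: {
    create: (state, args) => {
      if (state.exists) throw new Error(`Aggregate ${args.aggregateId} already exists`);
      const fields = args.data || {};
      // "== null" catches undefined and null but accepts falsy values such as 0.
      if (!args.aggregateId || REQUIRED_FIELDS.some(name => fields[name] == null)) {
        throw new Error(`Can't create incomplete aggregate: ${JSON.stringify(args)}`);
      }
      return { type: 'created', payload: args };
    },
    update: (state, args) => {
      if (!state.exists) throw new Error(`Aggregate ${args.aggregateId} does not exist`);
      return { type: 'updated', payload: args };
    }
  }
};

// In-memory stand-in for an event store: an append-only list of events.
const eventStore = [];

// Rebuild an aggregate's local state by replaying its events.
function loadState(aggregateId) {
  return eventStore
    .filter(event => event.aggregateId === aggregateId)
    .reduce(
      (state, event) => entityAggregate.eventHandlers[event.type](state, event),
      entityAggregate.initialState()
    );
}

// Run a command against the current state and append the resulting event.
function handleCommand(commandName, args) {
  const state = loadState(args.aggregateId);
  const { type, payload } = entityAggregate.commands[commandName](state, args);
  const event = { aggregateId: args.aggregateId, type, payload };
  eventStore.push(event);
  return event;
}

const data = { date1: '2017-01-01', date2: '2017-02-01', int1: 0, int2: 5, string: 'abc' };
handleCommand('create', { aggregateId: 'e1', data });
handleCommand('update', { aggregateId: 'e1', data: { ...data, int2: 6 } });
console.log(eventStore.map(event => event.type)); // [ 'created', 'updated' ]

try {
  handleCommand('create', { aggregateId: 'e1', data });
} catch (err) {
  console.log(err.message); // Aggregate e1 already exists
}
```

The sketch rebuilds the state from the stored events before each command runs. That is why the second `create` for `e1` is rejected without any separate existence lookup.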

The command service is configured to receive commands through Seneca (based on RabbitMQ, see above), and it also publishes events through Seneca. For the latter purpose, I created a bus implementation for reSolve, which you can find in resolve-bus-seneca/index.js. The most important detail is that the RabbitMQ exchange used by the bus is configured as a fanout type, which makes it possible for multiple clients to receive the same messages through that exchange.

I may decide in the future to publish this bus as an npm package, but at this time it is part of the demo codebase.
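The effect of the fanout exchange can be illustrated without RabbitMQ. In the following sketch, Node's built-in `EventEmitter` stands in for the exchange, so every subscriber receives every published event. With a plain work queue, each message would go to only one consumer. The `FanoutBus` class and both subscribers are hypothetical simplifications of the readmodel service and the query-change-detector. They don't reproduce resolve-bus-seneca/index.js, and unlike a real fanout exchange there is no per-consumer queue or persistence here.

```javascript
const { EventEmitter } = require('events');

// Hypothetical in-memory stand-in for a fanout exchange:
// every subscriber receives every published event.
class FanoutBus {
  constructor() {
    this.emitter = new EventEmitter();
  }
  publish(event) {
    // emit() calls all registered listeners synchronously, in registration order
    this.emitter.emit('event', event);
  }
  subscribe(handler) {
    this.emitter.on('event', handler);
  }
}

const bus = new FanoutBus();

// Subscriber 1: a read model projection that keeps entities by id.
const readModel = new Map();
bus.subscribe(event => {
  if (event.type === 'created') {
    readModel.set(event.aggregateId, { _id: event.aggregateId, ...event.payload.data });
  } else if (event.type === 'updated') {
    const existing = readModel.get(event.aggregateId) || { _id: event.aggregateId };
    readModel.set(event.aggregateId, { ...existing, ...event.payload.data });
  }
});

// Subscriber 2: a change detector that only records which aggregates changed.
const changedIds = new Set();
bus.subscribe(event => changedIds.add(event.aggregateId));

bus.publish({ type: 'created', aggregateId: 'e1', payload: { data: { int1: 1 } } });
bus.publish({ type: 'updated', aggregateId: 'e1', payload: { data: { int1: 2 } } });

console.log(readModel.get('e1')); // { _id: 'e1', int1: 2 }
console.log([...changedIds]); // [ 'e1' ]
```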

The readmodel service

This service is new to the project, and its purpose is to receive domain events through the bus and react by creating and maintaining a persistent representation of the entities in the system. As an example, here is the event handler that creates a new instance (from events.js):

```javascript
this.add('role: event, aggregateName: entity, eventName: created', (m, r) => {
  m = fixObject(m);
  const newObject = m.event.payload.data;
  newObject._id = m.event.payload.data.id;
  db(db =>
    db.collection(m.aggregateName).insertOne(newObject, err => {
      if (err) console.error('Error persisting new entity: ', err);
      r();
    })
  );
});
```

Query tracking

The second part of the new functionality is the change notification feature. The following steps are performed for this purpose:

- The front-end runs a data query, as before. However, now it passes a parameter that tells the web-proxy to track the query.

- The web-proxy sends a message to the query-change-detector to register the query for tracking.

- Data is queried via the query-service and returned to the front-end. An ID value for the tracked query is also returned.

- With the ID value, the front-end opens a socket.io connection to the web-proxy, which registers the client and connects it with the tracked query by means of the ID.

- At a later point, if the command-service raises a domain event, the query-change-detector receives this and runs tracked queries to detect changes.

- If changes are found, a change notification message is sent to the web-proxy.

- The web-proxy uses the open socket.io connection to notify the front-end of the changes.

- The front-end handles the change notification by applying changes to the visible grid.
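The core of the detection step, deciding whether a tracked query's result has changed, comes down to comparing the previous result with the current one. The sketch below assumes results are arrays of rows with an `_id` field. `diffResults` and its return shape are hypothetical, and the sketch covers only plain row lists. It does not handle the grouped or summary queries that the real query-change-detector also has to deal with.

```javascript
// Hypothetical sketch: compare two result sets of a tracked query and
// classify rows as added, removed or modified, matching rows by _id.
function diffResults(previous, current) {
  const prevById = new Map(previous.map(row => [row._id, row]));
  const currById = new Map(current.map(row => [row._id, row]));

  const added = current.filter(row => !prevById.has(row._id));
  const removed = previous.filter(row => !currById.has(row._id));
  // JSON comparison is a simple, key-order-sensitive equality check,
  // adequate for rows produced by the same query.
  const modified = current.filter(
    row => prevById.has(row._id) && JSON.stringify(prevById.get(row._id)) !== JSON.stringify(row)
  );

  return { added, removed, modified, changed: added.length + removed.length + modified.length > 0 };
}

const before = [{ _id: 1, int1: 10 }, { _id: 2, int1: 20 }];
const after = [{ _id: 2, int1: 25 }, { _id: 3, int1: 30 }];
const diff = diffResults(before, after);
console.log(diff.added.map(r => r._id), diff.removed.map(r => r._id), diff.modified.map(r => r._id));
// [ 3 ] [ 1 ] [ 2 ]
```

A row reported as `removed` has not necessarily been deleted. It may simply no longer match the query's filter, which is exactly what a grid showing that query needs to know.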

Here is another flowchart outlining the steps:

The step of registering the query is very simple. As part of its basic query processing, the web-proxy processes the query parameters passed from the front-end and generates a message to send to the query-service. To register the query for change tracking, that same message object is passed on to the query-change-detector. You can see this towards the end of the listValues function in proxy.js, and the other side in querychanges.js.
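On the receiving side, registering a query comes down to storing the message under a new ID and returning that ID. The ID eventually reaches the front-end, which uses it to connect via socket.io. The following is a minimal sketch that assumes an in-memory `Map` and Node's `crypto.randomUUID` (available since Node.js 14.17). The `QueryRegistry` class is hypothetical and is not the code in querychanges.js.

```javascript
const { randomUUID } = require('crypto');

// Hypothetical in-memory registry of tracked queries.
class QueryRegistry {
  constructor() {
    this.queries = new Map(); // liveId -> { queryMessage, lastResult }
  }

  // Store the message that was also sent to the query-service and return its ID.
  register(queryMessage) {
    const liveId = randomUUID();
    this.queries.set(liveId, { queryMessage, lastResult: null });
    return liveId;
  }

  get(liveId) {
    return this.queries.get(liveId);
  }

  // Keep the latest result so the next run can be compared against it.
  setLastResult(liveId, result) {
    const entry = this.queries.get(liveId);
    if (entry) entry.lastResult = result;
  }

  // Stop tracking, e.g. when the client's socket disconnects.
  unregister(liveId) {
    return this.queries.delete(liveId);
  }
}

const registry = new QueryRegistry();
// Illustrative message only; the real fields are generated by the web-proxy.
const liveId = registry.register({ role: 'entitiesQuery', params: { take: 20 } });
console.log(typeof liveId, registry.queries.size); // string 1
```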

Socket.io is a JavaScript library that facilitates bidirectional communication (similar to SignalR for ASP.NET projects). The web-proxy accepts socket.io client connections in sockets.js, and the startup code of the service has been modified to spin up socket.io (from web-proxy/index.js):

```javascript
const express = seneca.export('web/context')();
const server = http.Server(express);
const io = socketIo(server);

require('./sockets')(seneca, io, liveClients);

const port = process.env.WEBPROXY_PORT || 3000;
server.listen(port, () => {
  console.log('Web Proxy running on port ' + port);
});
```

As part of the query logic in dataStore.js, the front-end application connects to the web-proxy using socket.io:

```javascript
if (params.live && res.liveId) {
  var socket = io.connect(dataStoreOptions.socketIoUrl);
  socket.on('hello', function(args, reply) {
    socket.on('registered', function() {
      store.registerSocket(res.liveId, socket);
      socket.on('querychange', function(changeInfo) {
        dataStoreOptions.changeNotification(changeInfo);
      });
    });
    reply({
      liveId: res.liveId
    });
  });
}
```
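The handshake in this code works as follows:

1. The server sends `hello` with an acknowledgement callback.
2. The client answers with its `liveId`.
3. The server associates the socket with that ID and confirms with `registered`.
4. From then on, `querychange` notifications can flow.

The following sketch simulates this round trip without socket.io. Two linked `EventEmitter` objects stand in for the connection, and a method named `send` takes the place of socket.io's `emit`. `createSocketPair`, `LiveClients.register`, `onConnection` and `connectClient` are hypothetical. Only `hasId` and `getSocket` mirror the `liveClients` calls used in the web-proxy's queryChanges.js, and the real sockets.js is not reproduced.

```javascript
const { EventEmitter } = require('events');

// Hypothetical stand-in for a socket.io connection: send() on one endpoint
// triggers the other endpoint's listeners. A function argument is passed
// through unchanged, so the receiver can call it as an acknowledgement.
function createSocketPair() {
  const server = new EventEmitter();
  const client = new EventEmitter();
  server.send = (event, ...args) => client.emit(event, ...args);
  client.send = (event, ...args) => server.emit(event, ...args);
  return { server, client };
}

// Hypothetical registry of live clients, keyed by tracked query ID.
class LiveClients {
  constructor() {
    this.sockets = new Map();
  }
  register(liveId, socket) {
    this.sockets.set(liveId, socket);
  }
  hasId(liveId) {
    return this.sockets.has(liveId);
  }
  getSocket(liveId) {
    return this.sockets.get(liveId);
  }
}

// Server side: greet the new connection and wait for the client's liveId.
function onConnection(socket, liveClients) {
  socket.send('hello', {}, reply => {
    liveClients.register(reply.liveId, socket);
    socket.send('registered');
  });
}

// Client side, same nesting as the dataStore.js code: the 'registered'
// listener is attached before replying, so it is in place before confirmation.
function connectClient(socket, liveId, onChange) {
  socket.on('hello', (args, reply) => {
    socket.on('registered', () => {
      socket.on('querychange', onChange);
    });
    reply({ liveId });
  });
}

const liveClients = new LiveClients();
const { server, client } = createSocketPair();
const received = [];
connectClient(client, 'q1', changeInfo => received.push(changeInfo));
onConnection(server, liveClients);

// Later, a change notification for query 'q1' reaches the web-proxy:
if (liveClients.hasId('q1')) {
  liveClients.getSocket('q1').send('querychange', { liveId: 'q1', batchUpdate: false, events: [] });
}
console.log(received.length); // 1
```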

In query-change-detector/events.js, you can see the handling of incoming domain events. To avoid delaying event processing, incoming events are accumulated in a queue, grouped by the entities they pertain to. In a background loop, the query-change-detector then handles the events, re-runs the affected tracked queries where necessary, and fires events of its own when it detects changes. Some additional handling prevents overly large numbers of change notifications from being sent when domain events arrive in bursts, and there are various edge-case checks that depend on the types of the queries.
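The queueing strategy can be sketched as follows. The event handler only appends to a per-entity queue and returns immediately. A separate loop drains the queues, so a burst of events for one entity triggers a single round of processing. `ChangeQueue`, the 200 ms interval and the `processEntity` callback are hypothetical. The notification limits and query-type checks described above are not included.

```javascript
// Hypothetical sketch: accumulate domain events per entity and process
// them in batches from a background loop.
class ChangeQueue {
  constructor(processEntity, intervalMs = 200) {
    this.pending = new Map(); // aggregateId -> events received since the last flush
    this.processEntity = processEntity;
    this.intervalMs = intervalMs;
    this.running = false;
    this.timer = null;
  }

  // Called for every incoming domain event; only queues, never blocks.
  enqueue(event) {
    const events = this.pending.get(event.aggregateId) || [];
    events.push(event);
    this.pending.set(event.aggregateId, events);
  }

  // Process everything queued so far: one call per entity, however many events it has.
  async flush() {
    const batch = this.pending;
    this.pending = new Map(); // events arriving meanwhile go into the next batch
    for (const [aggregateId, events] of batch) {
      try {
        await this.processEntity(aggregateId, events);
      } catch (err) {
        console.error('Error processing changes for', aggregateId, err);
      }
    }
  }

  // Background loop: wait, flush, repeat until stopped.
  start() {
    this.running = true;
    const loop = async () => {
      await this.flush();
      if (this.running) this.timer = setTimeout(loop, this.intervalMs);
    };
    this.timer = setTimeout(loop, this.intervalMs);
  }

  stop() {
    this.running = false;
    clearTimeout(this.timer);
  }
}

const calls = [];
const queue = new ChangeQueue(async (aggregateId, events) => {
  // The real service would re-run the tracked queries affected by these events here.
  calls.push(`${aggregateId}:${events.length}`);
});
queue.enqueue({ aggregateId: 'e1', type: 'updated' });
queue.enqueue({ aggregateId: 'e1', type: 'updated' });
queue.enqueue({ aggregateId: 'e2', type: 'created' });
queue.flush().then(() => console.log(calls)); // [ 'e1:2', 'e2:1' ]
```

The usage lines call `flush()` directly, so the batching is visible without waiting for the timer. The two events for `e1` result in a single call.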

When the change notification is received by the web-proxy, it uses the existing socket.io connection to send the notification to the front-end (from queryChanges.js):

```javascript
if (liveClients.hasId(m.queryId)) {
  const socket = liveClients.getSocket(m.queryId);
  if (socket) {
    socket.emit('querychange', {
      liveId: m.queryId,
      batchUpdate: m.batchUpdate,
      events: m.events
    });
    ...
  } ...
```

Finally, the front-end client receives the change notification. In changeNotification.js, you can see the code I wrote to apply changes to the Data Grid (function trackGridChanges) depending on the notification. I attempt to merge the changes into the current view of the grid as efficiently as possible.

In contrast, the function trackPivotGridChanges is much shorter and represents the minimum required implementation: it simply reloads the grid. Unfortunately, the Pivot Grid does not currently support the same granular update techniques as the Data Grid.
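The trade-off between the two strategies can be sketched in a framework-neutral way. The function below applies a change notification to a plain array that stands in for the rows currently shown. Whenever merging is not clearly safe, it falls back to a full reload, which is all the Pivot Grid variant does. The name `applyChangeNotification`, the `reload` callback and the event shape (`type`, `aggregateId`, `data`) are assumptions. This is neither the DevExtreme API nor the code in changeNotification.js. The sketch also ignores the fact that an update can move a row into or out of the current filter or sort order.

```javascript
// Hypothetical sketch: patch visible rows in place where that is safe,
// otherwise fall back to reloading the whole view.
function applyChangeNotification(visibleRows, changeInfo, reload) {
  // Batch updates carry too many changes to merge one by one.
  if (changeInfo.batchUpdate) {
    reload();
    return 'reloaded';
  }

  const indexById = new Map(visibleRows.map((row, index) => [row._id, index]));
  for (const event of changeInfo.events) {
    if (event.type === 'updated') {
      const index = indexById.get(event.aggregateId);
      // Updates to rows outside the current view are ignored in this sketch.
      if (index !== undefined) {
        visibleRows[index] = { ...visibleRows[index], ...event.data };
      }
    } else {
      // Any other event (e.g. 'created') may affect sorting or paging: reload.
      reload();
      return 'reloaded';
    }
  }
  return 'merged';
}

const rows = [{ _id: 'e1', int1: 1 }, { _id: 'e2', int1: 2 }];
let reloads = 0;
const result = applyChangeNotification(
  rows,
  { liveId: 'q1', batchUpdate: false, events: [{ type: 'updated', aggregateId: 'e2', data: { int1: 3 } }] },
  () => reloads++
);
console.log(result, rows[1], reloads); // merged { _id: 'e2', int1: 3 } 0
```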

Try it!

This concludes my description of the new branch. Please try it for yourself and don’t hesitate to get in touch (here or via email) if you have any problems, questions or suggestions!